Entropy change calculation is a cornerstone of thermodynamics, essential for understanding how energy transforms and systems evolve. This guide provides a comprehensive overview, suitable for advanced physics students and anyone interested in the concept of disorder and randomness. We’ll explore the principles of entropy, its mathematical formulations, and how to apply them to real-world scenarios.


Understanding Entropy and Its Significance

Entropy (S) is a fundamental concept in thermodynamics, often described as the degree of disorder or randomness within a system. More precisely, it measures the number of microstates (microscopic arrangements) consistent with the system's macroscopic properties: Boltzmann's relation gives ##S = k_B \ln \Omega##, where ##\Omega## is the number of such microstates and ##k_B## is the Boltzmann constant. A higher entropy value corresponds to more accessible microstates and therefore a more probable macrostate. Understanding entropy is crucial for grasping the Second Law of Thermodynamics, which governs the direction of spontaneous processes.
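Boltzmann's relation ##S = k_B \ln \Omega## connects entropy to the number of microstates ##\Omega##. The sketch below uses a deliberately simple toy model (an assumption for illustration, not a description of any real substance): ##N## independent particles that can each be "up" or "down". The macrostate with ##n## particles up has ##\Omega = \binom{N}{n}## microstates, and counting them shows why the evenly split macrostate is both the highest-entropy and the most probable one.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def microstates(n_total: int, n_up: int) -> int:
    """Number of ways to choose which n_up of n_total two-state particles are 'up'."""
    return math.comb(n_total, n_up)


def boltzmann_entropy(omega: int) -> float:
    """Boltzmann entropy S = k_B ln(Omega), in J/K."""
    return K_B * math.log(omega)


N = 100  # hypothetical toy system size
for n_up in (0, 10, 50):
    omega = microstates(N, n_up)
    print(f"n_up={n_up:3d}  Omega={omega:.3e}  S={boltzmann_entropy(omega):.3e} J/K")

# The evenly split macrostate has the most microstates, hence the highest entropy.
best = max(range(N + 1), key=lambda n: microstates(N, n))
print("most probable macrostate: n_up =", best)
```

A perfectly ordered macrostate (all particles down) has a single microstate and therefore zero entropy in this model.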

Defining Entropy and Its Properties

Entropy is a state function, meaning its value depends only on the current state of the system, not on the path taken to reach that state. It is measured in joules per kelvin (J/K). For a reversible process, an infinitesimal entropy change is the heat transferred reversibly divided by the absolute temperature at which the transfer occurs, ##dS = \delta Q_\text{rev}/T##. When heat ##Q## is transferred reversibly at constant temperature ##T##, this integrates to ##\Delta S = Q/T##, the relationship that forms the cornerstone of the calculations below.
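As a concrete case of ##\Delta S = Q/T## at constant temperature, consider a reversible isothermal expansion of an ideal gas (an example chosen here for illustration; the ideal-gas model and the numbers are assumptions). The gas absorbs ##Q_\text{rev} = nRT \ln(V_2/V_1)##, so dividing by ##T## gives ##\Delta S = nR \ln(V_2/V_1)##.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol·K)


def isothermal_heat(n_mol: float, temp_k: float, v1: float, v2: float) -> float:
    """Heat absorbed (J) in a reversible isothermal ideal-gas expansion from v1 to v2."""
    return n_mol * R * temp_k * math.log(v2 / v1)


def entropy_change_isothermal(q_rev: float, temp_k: float) -> float:
    """Delta S = Q_rev / T for heat transferred reversibly at constant temperature (J/K)."""
    return q_rev / temp_k


# Hypothetical case: 1 mol doubling its volume at 300 K
q = isothermal_heat(1.0, 300.0, 1.0, 2.0)
ds = entropy_change_isothermal(q, 300.0)
print(f"Q = {q:.1f} J, dS = {ds:.3f} J/K")  # dS should equal R ln 2, about 5.763 J/K
```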

The Second Law of Thermodynamics states that the total entropy of an isolated system can only increase over time, or remain constant in idealized reversible processes. Spontaneous processes therefore proceed in the direction that increases the total entropy of the universe. This increase is often associated with the degradation of energy into forms less able to do useful work, such as heat rejected at low temperature.

Entropy in Different Systems

Entropy manifests differently in various systems. For example, in a gas, entropy increases with higher volume and temperature because the molecules have more space and energy to move randomly. In a solid, entropy is lower because the atoms are more ordered. Phase transitions, such as melting or boiling, involve significant entropy changes, as the substance transitions from an ordered to a more disordered state.
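Because entropy is a state function, two different reversible paths between the same end states must give the same ##\Delta S##, even though the heat absorbed along them differs. A minimal sketch, assuming one mole of a monatomic ideal gas (##C_V = \tfrac{3}{2}R##) taken from 300 K and volume ##V_1## to 400 K and ##2V_1## (hypothetical values), compares two such paths; since both temperature and volume rise, ##\Delta S## comes out positive.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol·K)
CV = 1.5 * R     # molar C_V of a monatomic ideal gas (assumed model)


def path_a(n: float, t1: float, t2: float, v1: float, v2: float) -> tuple[float, float]:
    """Isothermal expansion at t1, then constant-volume heating to t2. Returns (Q, dS)."""
    q_iso = n * R * t1 * math.log(v2 / v1)
    q_v = n * CV * (t2 - t1)
    ds = q_iso / t1 + n * CV * math.log(t2 / t1)
    return q_iso + q_v, ds


def path_b(n: float, t1: float, t2: float, v1: float, v2: float) -> tuple[float, float]:
    """Constant-volume heating to t2, then isothermal expansion at t2. Returns (Q, dS)."""
    q_v = n * CV * (t2 - t1)
    q_iso = n * R * t2 * math.log(v2 / v1)
    ds = n * CV * math.log(t2 / t1) + q_iso / t2
    return q_v + q_iso, ds


qa, dsa = path_a(1.0, 300.0, 400.0, 1.0, 2.0)
qb, dsb = path_b(1.0, 300.0, 400.0, 1.0, 2.0)
print(f"path A: Q = {qa:.1f} J, dS = {dsa:.4f} J/K")
print(f"path B: Q = {qb:.1f} J, dS = {dsb:.4f} J/K")
```

The heat totals differ by several hundred joules, but the entropy changes agree: heat is path-dependent, entropy is not.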

In a closed system (one that exchanges energy but not matter with its surroundings, such as a sealed container), the system's own entropy can rise or fall, for example through heat transfer or mixing. However, the total entropy of the universe (system plus surroundings) always increases in any real, irreversible process. This increase reflects the overall tendency toward disorder and the natural direction of spontaneous changes.
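A minimal sketch of the simplest irreversible process: heat ##Q## leaking directly from a hot body to a cold one. Both bodies are assumed to be large reservoirs whose temperatures stay fixed, so each entropy change is ##\pm Q/T##; the numbers are hypothetical.

```python
def total_entropy_change(q: float, t_hot: float, t_cold: float) -> float:
    """Entropy change of the universe (J/K) when heat q (J) flows from a reservoir
    at t_hot to a reservoir at t_cold (both in K, both held at constant temperature)."""
    ds_hot = -q / t_hot    # hot reservoir loses heat at the higher temperature
    ds_cold = q / t_cold   # cold reservoir gains the same heat at the lower temperature
    return ds_hot + ds_cold


print(total_entropy_change(1000.0, 400.0, 300.0))  # positive: irreversible heat flow
print(total_entropy_change(1000.0, 300.0, 300.0))  # zero: no temperature difference
```

The cold reservoir gains more entropy than the hot one loses because the same ##Q## is divided by a smaller ##T##.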

Understanding entropy is also vital in cosmology, where it helps explain the evolution of the universe, and in engineering, where it guides the design of efficient thermodynamic systems. The study of entropy is, therefore, essential for a comprehensive understanding of the natural world.

Calculating Entropy Change in Phase Transitions

Phase transitions (melting, freezing, boiling, condensation, sublimation, deposition) cause large entropy changes because the microscopic arrangement and accessible microstates of molecules change abruptly at the transition. For a reversible phase change at equilibrium temperature ##T_\text{tr}## (e.g., melting point, boiling point), the entropy change is proportional to the latent heat:

###\Delta S_\text{phase} \;=\; \dfrac{Q_\text{rev}}{T_\text{tr}} \;=\; \dfrac{L}{T_\text{tr}}###

Here ##L## is the latent heat (per unit mass or per mole, consistent with how you report ##\Delta S##). This formula follows directly from the Clausius definition of entropy for a reversible process (##dS = \delta Q_\text{rev}/T##). Because ##T_\text{tr}## is constant during a pure, equilibrium phase change, the integral simplifies to a ratio.

When to Use Which Expression

- Heating within a single phase: ###\Delta S = \displaystyle \int_{T_1}^{T_2} \frac{C_p(T)}{T}\, dT \approx C_p \ln\!\Big(\frac{T_2}{T_1}\Big)### if ##C_p## is roughly constant.

- Phase transition at ##T_\text{tr}##: ###\Delta S = \dfrac{L}{T_\text{tr}}### (use latent heat of fusion, vaporization, or sublimation).

- Compound paths: Add entropies for each reversible segment (heating/cooling + phase-change jumps).
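The compound-path rule can be sketched in Python for 1 kg of ice at 0°C turned into steam at 100°C. The latent heats are the values used in this article; the specific heat of liquid water, 4.18 kJ/(kg·K), is an assumed constant average between 0 and 100°C (the real value varies slightly). The heating segment is integrated numerically, so the same function would also accept a temperature-dependent ##C_p(T)##.

```python
import math
from typing import Callable

L_FUSION = 333.55        # kJ/kg, melting at 273.15 K
L_VAPORIZATION = 2256.9  # kJ/kg, boiling at 373.15 K
C_LIQUID = 4.18          # kJ/(kg·K), assumed constant between 273.15 K and 373.15 K


def heating_entropy(cp: Callable[[float], float], t1: float, t2: float, steps: int = 1000) -> float:
    """Approximate the integral of cp(T)/T dT from t1 to t2 with the midpoint rule."""
    h = (t2 - t1) / steps
    total = 0.0
    for i in range(steps):
        t_mid = t1 + (i + 0.5) * h
        total += cp(t_mid) / t_mid
    return total * h


def phase_entropy(latent: float, t_tr: float) -> float:
    """Entropy change L / T_tr of a reversible phase change at fixed temperature."""
    return latent / t_tr


# Sum the reversible segments: melt, heat the liquid, boil (per kg, in kJ/K).
segments = {
    "melt at 273.15 K": phase_entropy(L_FUSION, 273.15),
    "heat liquid 273.15 -> 373.15 K": heating_entropy(lambda t: C_LIQUID, 273.15, 373.15),
    "boil at 373.15 K": phase_entropy(L_VAPORIZATION, 373.15),
}
for name, ds in segments.items():
    print(f"{name:32s} dS = {ds:.3f} kJ/K")
print(f"{'total':32s} dS = {sum(segments.values()):.3f} kJ/K")

# For constant cp the integral reduces to cp * ln(T2/T1); the two should agree closely.
print(f"closed form for heating: {C_LIQUID * math.log(373.15 / 273.15):.3f} kJ/K")
```

Under these assumptions the total is about 8.57 kJ/K, and the vaporization step alone contributes roughly 6.05 kJ/K of it.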

Typical Values for Water (per kg)

| Transition | Latent heat ##L## | Temperature ##T_\text{tr}## | ##\Delta S = L/T_\text{tr}## | Interpretation |
|---|---|---|---|---|
| Fusion (ice → liquid) | ##333.55\ \text{kJ/kg}## | ##273.15\ \text{K}## | ##\approx 1.22\ \text{kJ/(kg·K)}## | Entropy increases; liquid has more accessible microstates than ice. |
| Vaporization (liquid → vapor) | ##2256.9\ \text{kJ/kg}## | ##373.15\ \text{K}## | ##\approx 6.05\ \text{kJ/(kg·K)}## | Large increase; gas is far more disordered than liquid. |
| Condensation (vapor → liquid) | ##2256.9\ \text{kJ/kg}## released (same magnitude as vaporization) | ##373.15\ \text{K}## | ##\approx -6.05\ \text{kJ/(kg·K)}## | Entropy decreases; the released heat must be rejected to the surroundings. |

Worked Examples

Condensing 0.50 kg of steam at 100°C. ###\Delta S = \dfrac{(0.50\ \text{kg})(-2256.9\ \text{kJ/kg})}{373.15\ \text{K}} \approx -3.02\ \text{kJ/K}### (negative; the system loses entropy while the surroundings gain entropy as they absorb the released heat).

Melting 1 kg of ice at 0°C. ###\Delta S = \dfrac{(1\ \text{kg})(333.55\ \text{kJ/kg})}{273.15\ \text{K}} \approx 1.22\ \text{kJ/K}### (positive).

Boiling 1 kg of water at 100°C. ###\Delta S = \dfrac{(1\ \text{kg})(2256.9\ \text{kJ/kg})}{373.15\ \text{K}} \approx 6.05\ \text{kJ/K}### (positive, much larger than fusion).
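The worked examples above can be reproduced with a small helper. A minimal sketch; the function name `phase_change_entropy` is hypothetical, and the sign convention (pass a negative latent heat when heat is released) is an assumption chosen for convenience.

```python
def phase_change_entropy(mass_kg: float, latent_kj_per_kg: float, t_tr_k: float) -> float:
    """Delta S (kJ/K) = m * L / T_tr. Use a negative latent heat for heat released
    (freezing, condensation, deposition)."""
    return mass_kg * latent_kj_per_kg / t_tr_k


examples = [
    ("condense 0.50 kg steam at 100 °C", phase_change_entropy(0.50, -2256.9, 373.15)),
    ("melt 1 kg ice at 0 °C", phase_change_entropy(1.0, 333.55, 273.15)),
    ("boil 1 kg water at 100 °C", phase_change_entropy(1.0, 2256.9, 373.15)),
]
for label, ds in examples:
    print(f"{label:34s} dS = {ds:+.2f} kJ/K")
```

Running it prints -3.02, +1.22 and +6.05 kJ/K for the three cases.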

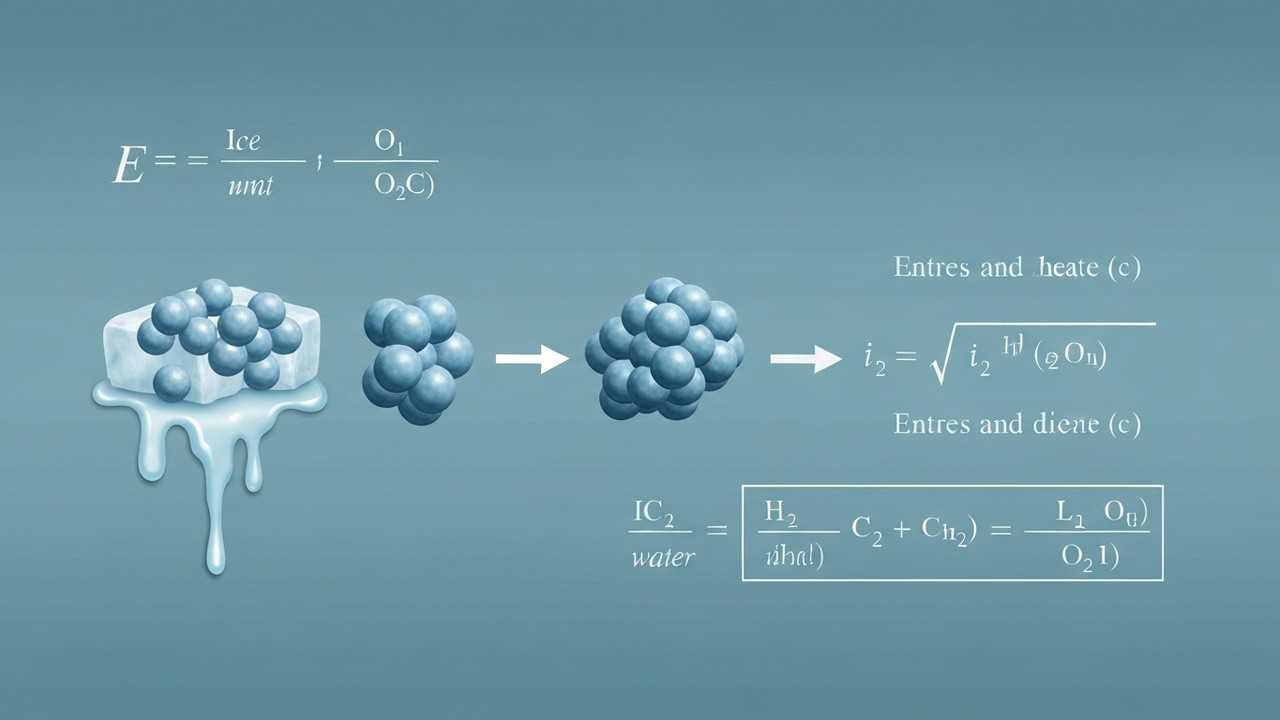

Melting Ice: A Practical Example

Consider the phase transition of melting ice. When ice melts into water at 0°C, the system absorbs heat (##Q##) without any change in temperature. Because the temperature stays fixed, the entropy change (##\Delta S##) can be calculated with ##\Delta S = Q/T##, where ##Q## is the heat absorbed during melting and ##T## is the absolute temperature. This example shows how to carry out an entropy change calculation for a real-world process.

The latent heat of fusion (##L_f##) for ice is about 334 kJ/kg, meaning that 334,000 J of energy is required to melt one kilogram of ice at 0°C. The phase transition occurs at 273.15 K (0°C converted to kelvin). For a mass ##m## of ice, the entropy change is therefore ##\Delta S = mL_f/T##.

Step-by-step calculation

Let's calculate the entropy change when 1 kg of ice at 0°C melts to water at 0°C. First, we determine the heat absorbed during melting, ##Q = mL_f##, with ##m = 1\ \text{kg}## and ##L_f = 334{,}000\ \text{J/kg}##: ###Q = (1\ \text{kg})(334{,}000\ \text{J/kg}) = 334{,}000\ \text{J}###

Next, we apply ##\Delta S = Q/T## with ##T = 273.15\ \text{K}##: ###\Delta S = \dfrac{334{,}000\ \text{J}}{273.15\ \text{K}} \approx 1222.8\ \text{J/K}### This positive value indicates that the entropy of the system increases during melting, as the ice transitions from an ordered solid state to a more disordered liquid state.

This calculation shows how a simple phase transition can be analyzed quantitatively, and it connects directly to the Second Law of Thermodynamics: to judge the direction and spontaneity of the process, the entropy change of the surroundings that supply the heat must be included as well.
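Including the surroundings can be sketched as follows, assuming the heat is drawn from large surroundings held at a fixed temperature ##T_\text{surr}## (293.15 K is a hypothetical room temperature, and the function name is illustrative). At ##T_\text{surr} = 273.15\ \text{K}## the surroundings lose exactly the entropy the ice gains, so the total is zero (reversible melting at equilibrium); with warmer surroundings the total is positive and melting proceeds spontaneously.

```python
def melting_entropy_balance(mass_kg: float, latent_j_per_kg: float,
                            t_melt_k: float, t_surr_k: float) -> tuple[float, float, float]:
    """Return (dS_ice, dS_surroundings, dS_total) in J/K when ice melts at t_melt_k
    using heat drawn from large surroundings held at t_surr_k."""
    q = mass_kg * latent_j_per_kg   # heat absorbed by the ice, Q = m * L_f
    ds_ice = q / t_melt_k           # ice melts at its fixed melting point
    ds_surr = -q / t_surr_k         # surroundings give up the same heat
    return ds_ice, ds_surr, ds_ice + ds_surr


for t_surr in (273.15, 293.15):
    ds_ice, ds_surr, ds_tot = melting_entropy_balance(1.0, 334_000.0, 273.15, t_surr)
    print(f"T_surr = {t_surr} K: ice {ds_ice:.1f}, surroundings {ds_surr:.1f}, total {ds_tot:.1f} J/K")
```

With surroundings at 293.15 K the total comes out at roughly +83 J/K.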

Applications of Entropy Change Calculations

The concept of entropy change has wide-ranging applications across various scientific and engineering disciplines. From understanding the efficiency of heat engines to predicting the behavior of chemical reactions, the ability to calculate and interpret entropy changes is crucial. Let's explore some key applications.

Thermodynamic Efficiency and Entropy

In thermodynamics, entropy calculations are essential for evaluating the efficiency of heat engines and refrigerators. Real-world processes are irreversible, and the Second Law of Thermodynamics requires every irreversible process to generate entropy. This entropy generation translates into a loss of available energy, reducing the efficiency of these systems. Analyzing entropy changes helps engineers design more efficient engines and refrigeration units.

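The Carnot cycle, a theoretical reversible cycle, gives the maximum possible efficiency for a heat engine operating between two reservoir temperatures. Because the cycle is reversible, the entropy the working fluid absorbs from the hot reservoir, ##Q_H/T_H##, equals the entropy it rejects to the cold reservoir, ##Q_C/T_C##; hence ##Q_C/Q_H = T_C/T_H## and the efficiency is ##\eta = 1 - T_C/T_H##. Real-world engines are less efficient because irreversible processes such as friction and heat loss generate entropy, forcing more heat to be rejected to the cold reservoir.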
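The link between efficiency and entropy generation can be sketched with an entropy balance over one engine cycle. A minimal sketch with hypothetical numbers (reservoirs at 600 K and 300 K, 1000 J of heat input): a Carnot engine, with efficiency ##\eta = 1 - T_C/T_H##, generates no entropy, while a less efficient engine generates ##S_\text{gen} = Q_C/T_C - Q_H/T_H > 0##, and the work it falls short by equals ##T_C S_\text{gen}##.

```python
def carnot_efficiency(t_hot: float, t_cold: float) -> float:
    """Maximum (Carnot) efficiency 1 - T_C/T_H for reservoir temperatures in kelvin."""
    return 1.0 - t_cold / t_hot


def entropy_generated(q_hot: float, work: float, t_hot: float, t_cold: float) -> float:
    """Entropy produced per cycle (J/K). The working fluid returns to its initial state,
    so only the reservoirs change: the hot one loses q_hot / t_hot and the cold one
    gains q_cold / t_cold, where q_cold = q_hot - work by the first law."""
    q_cold = q_hot - work
    return q_cold / t_cold - q_hot / t_hot


T_H, T_C, Q_H = 600.0, 300.0, 1000.0  # hypothetical reservoirs (K) and heat input per cycle (J)

w_max = carnot_efficiency(T_H, T_C) * Q_H
print(f"Carnot: W = {w_max:.1f} J, S_gen = {entropy_generated(Q_H, w_max, T_H, T_C):.4f} J/K")

w_real = 300.0  # hypothetical output of a real, irreversible engine (J)
s_gen = entropy_generated(Q_H, w_real, T_H, T_C)
print(f"real:   W = {w_real:.1f} J, S_gen = {s_gen:.4f} J/K, lost work T_C*S_gen = {T_C * s_gen:.1f} J")
```

With these numbers the Carnot engine delivers 500 J, while the 300 J engine generates about 0.667 J/K; multiplied by ##T_C## that is exactly the 200 J it falls short of the Carnot limit.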

Chemical Reactions and Entropy

In chemistry, entropy changes are used to predict the spontaneity of chemical reactions. The Gibbs free energy (##G = H - TS##) combines enthalpy (##H##) and entropy (##S##), and for a process at constant temperature and pressure its change, ##\Delta G = \Delta H - T\Delta S##, determines whether the reaction can proceed spontaneously.

If ##\Delta G## is negative, the reaction is spontaneous; if ##\Delta G## is positive, it is non-spontaneous under the given conditions; and if ##\Delta G = 0##, the system is at equilibrium. Because the entropy term is multiplied by ##T##, a reaction with positive ##\Delta S## can become spontaneous at a high enough temperature even when ##\Delta H## is positive, which is why knowing ##\Delta S## is critical for predicting spontaneity. This is particularly important in industrial processes, where optimizing reaction conditions is essential for efficiency and yield.
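The temperature dependence of ##\Delta G## can be sketched with a process whose numbers already appear in this article: vaporizing 1 kg of water at constant pressure (1 atm assumed). This is a minimal sketch that assumes ##\Delta H## and ##\Delta S## stay constant over the narrow range 90 to 110°C (an approximation). ##\Delta G## changes sign at ##T = \Delta H/\Delta S##, which recovers the normal boiling point.

```python
DH_VAP = 2256.9           # kJ/kg, enthalpy of vaporization of water near 100 °C
DS_VAP = DH_VAP / 373.15  # kJ/(kg·K), from Delta S = L / T_tr at the normal boiling point


def gibbs_change(dh: float, ds: float, temp_k: float) -> float:
    """Delta G = Delta H - T * Delta S, assuming dh and ds do not vary with temperature."""
    return dh - temp_k * ds


def classify(dg: float, tol: float = 1e-9) -> str:
    """Label the sign of Delta G for a process at constant temperature and pressure."""
    if dg < -tol:
        return "spontaneous"
    if dg > tol:
        return "non-spontaneous"
    return "equilibrium"


for t in (363.15, 373.15, 383.15):
    dg = gibbs_change(DH_VAP, DS_VAP, t)
    print(f"T = {t:.2f} K: dG = {dg:+.1f} kJ/kg ({classify(dg)})")

print(f"crossover temperature T = dH/dS = {DH_VAP / DS_VAP:.2f} K")
```

Below 100°C boiling is non-spontaneous, above it is spontaneous, and at 100°C liquid and vapor coexist in equilibrium.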

Key Takeaways

Understanding and calculating entropy changes is fundamental to the study of thermodynamics and its applications. We have explored the definition of entropy, its role in phase transitions, and its significance in various scientific and engineering fields. The ability to perform an entropy change calculation is crucial for analyzing and predicting the behavior of thermodynamic systems.

Review of Key Concepts

Entropy measures disorder, and the entropy change ##\Delta S## equals ##Q/T## for heat transferred reversibly at constant temperature. Phase transitions involve significant entropy changes, illustrated by the melting of ice. The Second Law of Thermodynamics states that the total entropy of an isolated system never decreases, which sets the direction of spontaneous processes.

Applications include assessing the efficiency of heat engines and predicting the spontaneity of chemical reactions. Mastering these concepts enables a deeper understanding of the natural world. The practical example of melting ice underscores the importance of entropy in everyday processes, showing how to quantify the degree of disorder.

Further Study

For further study, explore more complex thermodynamic systems, such as the behavior of gases under different conditions. Investigate the applications of entropy in information theory and cosmology. Continue to solve problems involving entropy changes to reinforce your understanding. Consider studying the concept of Gibbs free energy and its implications for chemical reactions.

| Concept | Description | Formula/Example |
|---|---|---|
| Entropy (##S##) | A measure of the number of microstates consistent with a system's macroscopic state, often described as disorder or randomness. Higher entropy indicates greater disorder. | ##S = k_B \ln \Omega## |
| Entropy change (##\Delta S##) | The change in entropy during a process. Positive ##\Delta S## indicates an increase in disorder. | ##\Delta S = Q/T## (reversible, constant ##T##); ##\Delta S = mL_f/T## (melting) |
| Second Law of Thermodynamics | The total entropy of an isolated system never decreases; it increases in every irreversible process. | ##\Delta S_\text{total} \ge 0## |
| Melting ice example | Entropy change when 1 kg of ice melts at 0°C. | ##\Delta S = (1\ \text{kg})(334{,}000\ \text{J/kg})/(273.15\ \text{K}) \approx 1222.8\ \text{J/K}## |
