Big O notation is a mathematical notation that describes how an algorithm's resource use grows with the size of its input. It provides a way to classify algorithms by how their runtime or space requirements scale, which is critical in computer science for building efficient and scalable systems.

What is Big O Notation? Unveiling Asymptotic Analysis

In the realm of computer science and mathematics, Big O notation is a fundamental concept used to classify algorithms and mathematical functions by their efficiency. It provides a mathematical framework to describe how the runtime or space requirements of an algorithm scale as the size of the input grows. By abstracting away low-level details like hardware performance and implementation-specific optimizations, Big O notation enables a clear comparison between algorithms.

Definition of Big O

Formally, Big O notation expresses an upper bound on the growth rate of a function. If we say an algorithm has time complexity ##O(f(n))##, it means that its runtime will not grow faster than a constant multiple of ##f(n)## for sufficiently large input sizes ##n##.

Mathematically:

### f(n) = O(g(n)) \iff \exists\, c > 0,\ \exists\, n_0 > 0 \ \text{such that}\ f(n) \le c \cdot g(n) \ \text{for all}\ n \ge n_0 ###

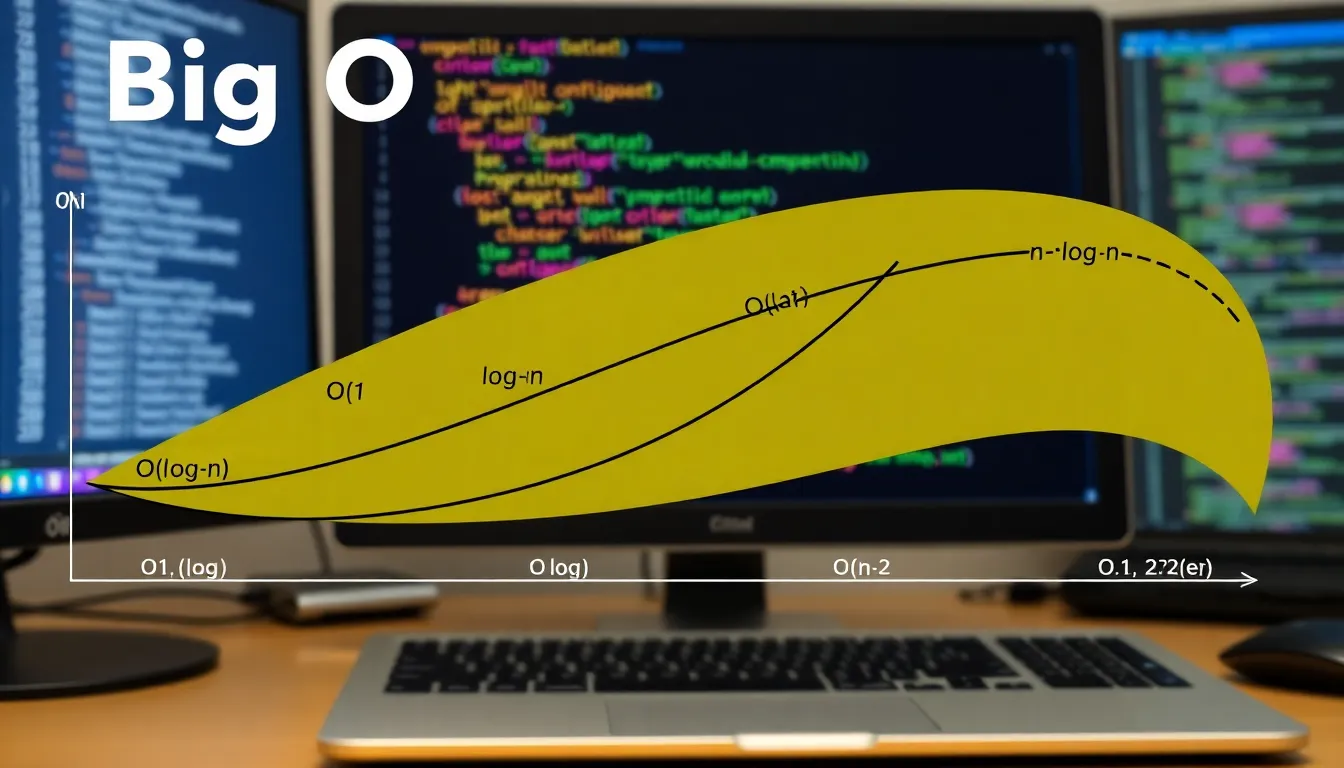
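To make the definition above concrete, here is a small numerical spot-check. It is a sketch, not a proof: the helper `holds_from` and the sample functions `f` and `g` are names invented for this illustration, and the check only covers a finite range of ##n##.

```python
def holds_from(f, g, c, n0, upto=10_000):
    """Return True if f(n) <= c * g(n) for every integer n in [n0, upto]."""
    return all(f(n) <= c * g(n) for n in range(n0, upto + 1))


def f(n):
    # Hypothetical step count of some algorithm: 3n^2 + 5n + 2.
    return 3 * n**2 + 5 * n + 2


def g(n):
    # Candidate bounding function.
    return n**2


# With c = 4 the bound holds from n0 = 6 onward, since n^2 >= 5n + 2 there.
print(holds_from(f, g, c=4, n0=6))   # True
# It fails if we start too early: f(5) = 102 > 4 * 25 = 100.
print(holds_from(f, g, c=4, n0=5))   # False
```

The pair ##c = 4##, ##n_0 = 6## is only one valid choice of witnesses. Any larger ##c## or ##n_0## works as well, which is why the lower-order terms ##5n + 2## do not affect the classification ##O(n^2)##.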

Common Complexities in Big O

Some frequently encountered complexities and their practical interpretations include:

| Big O Notation | Growth Rate | Example |
|---|---|---|
| O(1) | Constant time | Accessing an array element |
| O(log n) | Logarithmic time | Binary search |
| O(n) | Linear time | Traversing a list |
| O(n log n) | Linearithmic time | Merge sort, quicksort (average) |
| O(n²) | Quadratic time | Bubble sort, insertion sort |
| O(2ⁿ) | Exponential time | Solving subset problems by brute force |
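To get a feel for how far apart these growth rates are, the following sketch evaluates each function from the table at a few input sizes. The function name `step_counts` is made up for this example, and the values are idealized counts, not measured runtimes.

```python
import math


def step_counts(n):
    """Return the value of each common growth function at input size n."""
    return {
        "O(1)": 1,
        "O(log n)": math.log2(n),
        "O(n)": n,
        "O(n log n)": n * math.log2(n),
        "O(n^2)": n**2,
        "O(2^n)": 2**n,
    }


for n in (8, 16, 32):
    row = step_counts(n)
    print(n, {name: round(value) for name, value in row.items()})
```

Going from ##n = 16## to ##n = 32## doubles the linear count and quadruples the quadratic one, while the exponential count jumps from 65,536 to more than four billion.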

Why Big O Matters

Understanding Big O helps software engineers and researchers to:

- Predict scalability of algorithms as inputs grow.

- Compare efficiency across different algorithmic approaches.

- Avoid bottlenecks that emerge with poor complexity (e.g., quadratic or exponential growth).

- Make informed design choices when optimizing code for speed and memory usage.

Practical Example

Consider searching for a value in a sorted array:

- Linear search: Checks each element one by one ⇒ ##O(n)##.

- Binary search: Halves the search space each step ⇒ ##O(log n)##.

The difference becomes significant when the array has millions of elements. In the worst case, a linear search over one million elements takes about one million steps, while binary search needs only about ##\log_2 n## steps, which is roughly 20.
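The gap can be observed directly by counting steps instead of timing. The sketch below uses two illustrative helpers, `linear_search_steps` and `binary_search_steps`, which are assumptions of this example and count how many elements each strategy inspects.

```python
def linear_search_steps(arr, target):
    """Return how many elements a left-to-right scan inspects."""
    steps = 0
    for value in arr:
        steps += 1
        if value == target:
            break
    return steps


def binary_search_steps(arr, target):
    """Return how many midpoints binary search inspects in a sorted list."""
    left, right = 0, len(arr) - 1
    steps = 0
    while left <= right:
        steps += 1
        mid = (left + right) // 2
        if arr[mid] == target:
            break
        if arr[mid] < target:
            left = mid + 1
        else:
            right = mid - 1
    return steps


data = list(range(1_000_000))      # already sorted
target = data[-1]                  # worst case for the linear scan
print(linear_search_steps(data, target))   # 1000000
print(binary_search_steps(data, target))   # at most 20
```

Counting steps rather than seconds keeps the comparison independent of the machine, which is the same abstraction Big O makes.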

Summary Table

| Notation | Performance | Remarks |
|---|---|---|
| O(1) | Excellent | Independent of input size |
| O(log n) | Very good | Scales well even for large inputs |
| O(n) | Acceptable | Linear growth, manageable |
| O(n²) | Poor | Becomes impractical for large n |
| O(2ⁿ) | Terrible | Explodes with moderate input sizes |
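The last row is easy to underestimate. As a rough illustration, the sketch below solves a small subset-sum question by brute force, trying every subset of the input. The function `count_subsets_with_sum` and the sample numbers are invented for this example.

```python
from itertools import combinations


def count_subsets_with_sum(numbers, target):
    """Brute force: try every subset and count those that sum to target."""
    checked = 0
    matches = 0
    for size in range(len(numbers) + 1):
        for subset in combinations(numbers, size):
            checked += 1
            if sum(subset) == target:
                matches += 1
    return matches, checked


matches, checked = count_subsets_with_sum([3, 34, 4, 12, 5, 2], 9)
print(matches, checked)   # checked is 2**6 = 64
```

Six numbers mean 64 subsets, which is trivial. Sixty numbers would mean ##2^{60}## subsets, more than ##10^{18}##, which is why exponential algorithms stop being usable at input sizes that look small.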

Big O notation is not just a theoretical tool: it is a lens for evaluating algorithms in terms of real-world efficiency, scalability, and performance trade-offs.

Historical Context and Origins

The concept behind Big O notation can be traced back to the late 19th and early 20th centuries. Mathematicians like Paul Bachmann and Edmund Landau laid the groundwork for asymptotic analysis, which forms the basis of Big O. Their work focused on the behavior of functions as their arguments approach infinity or a specific limit, which is why the notation rests on a solid mathematical foundation.

Formal Definition and Interpretation

Formally, Big O notation describes an upper bound on a function's growth rate. If we say that a function f(x) is O(g(x)), it means that f(x) grows no faster than a constant multiple of g(x) as x approaches infinity. In other words, there exist a positive constant M and a value x₀ such that |f(x)| ≤ M|g(x)| for all x > x₀.

Practical Implications for Algorithm Analysis

The practical implications of Big O notation are vast, especially in algorithm analysis. For instance, an algorithm with a time complexity of O(n) will take linear time, meaning the runtime grows proportionally to the input size (n). Conversely, an algorithm with O(n²) complexity will have a runtime that grows quadratically, making it less efficient for large datasets. This allows developers to predict how an algorithm will perform as the input scales. This insight is critical for choosing the most appropriate algorithms to handle large datasets efficiently.
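One simple way to see the difference between linear and quadratic growth is a doubling experiment: double the input size and watch how the amount of work changes. The two counting functions below are illustrative stand-ins for real algorithms, not taken from any library.

```python
def linear_steps(n):
    """Count the iterations of a single loop over n items."""
    steps = 0
    for _ in range(n):
        steps += 1
    return steps


def quadratic_steps(n):
    """Count the iterations of two nested loops over n items."""
    steps = 0
    for _ in range(n):
        for _ in range(n):
            steps += 1
    return steps


for n in (100, 200, 400):
    print(n, linear_steps(n), quadratic_steps(n))
```

Each time n doubles, the linear count doubles and the quadratic count grows fourfold, so a dataset 1,000 times larger costs the quadratic version about a million times more work.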

Key Concepts in Big O Notation: Time and Space Complexity

Big O notation is a cornerstone in computer science, primarily used to assess the efficiency of algorithms. The two primary measures are time complexity and space complexity. Time complexity focuses on how the execution time of an algorithm scales with input size, while space complexity evaluates how the memory usage changes. These concepts are fundamental to understanding and comparing different algorithms.

Time Complexity Explained

Time complexity quantifies the amount of time an algorithm takes to complete as a function of its input size. It's expressed using Big O notation, which describes the upper bound of the algorithm's runtime. For example, an algorithm with O(n) time complexity implies the runtime increases linearly with the input size (n). Algorithms with O(1) time complexity are considered highly efficient as their runtime remains constant regardless of the input size. Understanding time complexity is critical for performance analysis and optimization.

Space Complexity Explained

Space complexity refers to the amount of memory an algorithm utilizes in relation to its input size. Similar to time complexity, space complexity is also expressed using Big O notation. For instance, an algorithm with O(n) space complexity means the memory usage grows linearly with the input size. Algorithms with O(1) space complexity are memory-efficient, using a constant amount of memory. Analyzing space complexity helps in optimizing memory usage and preventing memory-related issues.
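As a small illustration of the difference between O(1) and O(n) space, here are two ways to reverse a list. Both function names are made up for this sketch. The first reorders the input itself, and the second builds a new list.

```python
def reverse_in_place(items):
    """Reverse a list using O(1) extra space by swapping from both ends."""
    left, right = 0, len(items) - 1
    while left < right:
        items[left], items[right] = items[right], items[left]
        left += 1
        right -= 1
    return items


def reversed_copy(items):
    """Return a reversed copy, which needs O(n) extra space for the new list."""
    result = []
    for index in range(len(items) - 1, -1, -1):
        result.append(items[index])
    return result
```

Both run in O(n) time, so the trade-off here is purely about memory and about whether the caller still needs the original order.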

Common Big O Notations and Their Meanings

Several common notations are frequently encountered in algorithm analysis. O(1) denotes constant time, O(log n) represents logarithmic time, O(n) signifies linear time, O(n log n) indicates linearithmic time, O(n²) describes quadratic time, and O(2ⁿ) denotes exponential time. Each notation represents a different growth rate, impacting the algorithm's performance as the input size increases. Understanding these notations is essential for assessing algorithm efficiency and making informed choices. Recognizing these patterns aids in quick performance assessments.

Practical Applications of Big O Notation: From Algorithms to Real-World Scenarios

Big O notation extends beyond theoretical analysis, finding practical application in various real-world scenarios. In database systems, it helps optimize query performance and index design. In software development, it informs the choice of data structures and algorithms to ensure efficient code execution. It is a key factor in designing scalable systems.

Analyzing Algorithm Efficiency

The core application of Big O notation lies in analyzing the efficiency of algorithms. By expressing the time and space complexity of an algorithm, developers can predict how it will scale with different input sizes. For example, knowing that a sorting algorithm has a time complexity of O(n log n) allows developers to anticipate its performance on large datasets. This analysis is crucial for selecting the most appropriate algorithms for various tasks. Big O helps in making informed choices to optimize performance.
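To check an O(n log n) prediction against actual behavior, one option is to instrument a sort and count its comparisons. The following is a minimal sketch using a textbook top-down merge sort. The counting logic and the random test data are assumptions of this example.

```python
import math
import random


def merge_sort(items):
    """Return (sorted copy of items, number of element comparisons made)."""
    if len(items) <= 1:
        return list(items), 0
    mid = len(items) // 2
    left, left_count = merge_sort(items[:mid])
    right, right_count = merge_sort(items[mid:])
    merged, comparisons = [], left_count + right_count
    i = j = 0
    while i < len(left) and j < len(right):
        comparisons += 1
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    # One side is exhausted; the rest of the other side is already in order.
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged, comparisons


random.seed(0)  # arbitrary seed so repeated runs use the same data
for n in (1_000, 10_000, 100_000):
    data = [random.random() for _ in range(n)]
    _, comparisons = merge_sort(data)
    print(n, comparisons, round(n * math.log2(n)))
```

The measured comparison counts should stay below ##n \log_2 n## and grow at the same rate, which is what lets a developer extrapolate from a small benchmark to a large dataset.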

Database Query Optimization

In database systems, Big O notation plays a vital role in optimizing query performance. Analyzing the complexity of database queries helps in identifying bottlenecks and improving efficiency. Developers can use Big O to assess the impact of indexes and query strategies on the overall performance. This ensures quick data retrieval and enhances system responsiveness. Effective query optimization is essential for handling large datasets.
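The effect of an index can be sketched with a toy in-memory model. This is not how a real database engine is implemented, since production systems typically use tree-based or hash-based index structures. The table contents and the names `full_scan` and `indexed_lookup` are hypothetical. The point is only the contrast between scanning every row and searching a sorted key list.

```python
from bisect import bisect_left

# Hypothetical in-memory "table": a list of (user_id, name) rows in arbitrary order.
rows = [(42, "Ada"), (7, "Linus"), (19, "Grace"), (3, "Alan"), (88, "Edsger")]


def full_scan(rows, user_id):
    """Unindexed lookup: inspect rows one by one, O(n) in the number of rows."""
    for row in rows:
        if row[0] == user_id:
            return row
    return None


# A toy "index": the keys kept in sorted order, each paired with its row position.
index = sorted((row[0], position) for position, row in enumerate(rows))
index_keys = [key for key, _ in index]


def indexed_lookup(rows, user_id):
    """Indexed lookup: binary-search the sorted keys, O(log n) comparisons."""
    slot = bisect_left(index_keys, user_id)
    if slot < len(index_keys) and index_keys[slot] == user_id:
        return rows[index[slot][1]]
    return None


print(full_scan(rows, 19))        # (19, 'Grace')
print(indexed_lookup(rows, 19))   # (19, 'Grace')
```

Building the index costs O(n log n) time and O(n) extra space up front, which mirrors the real trade-off: an index speeds up reads at the price of storage and extra work when data changes.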

Software Development Best Practices

In software development, Big O notation guides the choice of data structures and algorithms, promoting efficient code execution. Developers use Big O to evaluate the performance implications of various design decisions. This ensures that software can handle increasing workloads without performance degradation. Prioritizing efficient algorithms is a key aspect of writing robust and scalable software. Good design practices are informed by Big O principles.
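A typical design decision of this kind is choosing a data structure for a duplicate check. The sketch below, with function names invented for illustration, contrasts a pairwise comparison with a set-based approach.

```python
def has_duplicates_quadratic(items):
    """Compare every pair of items: O(n^2) time, O(1) extra space."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_linear(items):
    """Remember items in a set: O(n) expected time, O(n) extra space."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False
```

The set-based version trades memory for speed and assumes the items are hashable. For a handful of items either version is fine, and the difference only matters as the workload grows.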

Similar Problems and Solutions

To solidify your grasp of Big O notation, let's explore some related problems and their solutions, providing a practical perspective.

Examples of Big O Problems with Solutions

To better understand Big O notation and asymptotic analysis, let us look at five classic problems in computer science. Each problem will include the statement, solution approach, and time complexity analysis. These examples demonstrate how Big O classification helps us analyze the efficiency of algorithms.

Problem 1: Finding the Maximum Element in an Array

Problem: Given an array of n integers, find the maximum element.

Solution: Iterate through the array once, comparing each element with the current maximum value.

Time Complexity: ##O(n)## because we must check each element once.

    def find_max(arr):
        """Return the largest element of a non-empty list."""
        max_val = arr[0]
        for num in arr:
            # Keep the largest value seen so far.
            if num > max_val:
                max_val = num
        return max_val

Problem 2: Searching for an Element in a Sorted Array

Problem: Search for a specific element in a sorted array.

Solution: Use binary search by dividing the search space in half each step.

Time Complexity: ##O(\log n)## because the array is halved at each iteration.

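    def binary_search(arr, target):
        """Return the index of target in sorted list arr, or -1 if absent."""
        left, right = 0, len(arr) - 1
        while left <= right:
            mid = (left + right) // 2
            if arr[mid] == target:
                return mid
            elif arr[mid] < target:
                left = mid + 1  # target can only be in the right half
            else:
                right = mid - 1  # target can only be in the left half
        return -1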

Problem 3: Sorting an Array with Bubble Sort

Problem: Sort an array of n integers using bubble sort.

Solution: Repeatedly traverse the array, comparing and swapping adjacent elements until sorted.

Time Complexity: ##O(n^2)## because the nested loops perform ##n(n-1)/2## comparisons of adjacent elements in total.

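    def bubble_sort(arr):
        """Sort arr in place in ascending order and return it."""
        n = len(arr)
        for i in range(n):
            # After pass i, the last i + 1 elements are in their final positions.
            for j in range(0, n - i - 1):
                if arr[j] > arr[j + 1]:
                    arr[j], arr[j + 1] = arr[j + 1], arr[j]
        return arr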
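The quadratic behavior can be confirmed by counting comparisons. The helper below is an instrumented variant written for this illustration, not part of the solution above. It sorts a copy of its input and reports how many adjacent pairs it compared.

```python
def bubble_sort_comparisons(arr):
    """Sort a copy of arr with bubble sort and return the comparison count."""
    items = list(arr)
    n = len(items)
    comparisons = 0
    for i in range(n):
        for j in range(0, n - i - 1):
            comparisons += 1
            if items[j] > items[j + 1]:
                items[j], items[j + 1] = items[j + 1], items[j]
    return comparisons


for n in (10, 100, 1000):
    # Reverse-sorted input; the count matches n * (n - 1) / 2 for any input.
    print(n, bubble_sort_comparisons(range(n, 0, -1)), n * (n - 1) // 2)
```

Because this version has no early exit, the comparison count depends only on the length of the input, not on its order.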

Problem 4: Finding the First Occurrence of a Repeated Character

Problem: Given a string, find the first character that repeats.

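Solution: Use a hash set to record the characters seen so far as you iterate, and return the first character that is already in the set.

Time Complexity: ##O(n)## on average, because each character is processed once and each set lookup takes constant expected time.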

    def first_repeated_char(s):
        """Return the first character seen a second time, or None if there is none."""
        seen = set()
        for ch in s:
            if ch in seen:
                return ch
            seen.add(ch)
        return None

Problem 5: Calculating the Factorial of a Number

Problem: Calculate the factorial of a given number n.

Solution: Use either recursion or an iterative loop to multiply numbers from 1 to n.

Time Complexity: ##O(n)## since we perform ##n - 1## multiplications, counting each multiplication as a single step.

    def factorial(n):
        """Return n! for a non-negative integer n, using an iterative loop."""
        if n == 0 or n == 1:
            return 1
        result = 1
        for i in range(2, n + 1):
            result *= i
        return result
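The solution also mentions recursion as an option. A recursive variant might look like the sketch below. The name `factorial_recursive` and the explicit check for negative input are additions for this example.

```python
def factorial_recursive(n):
    """Compute n! recursively; assumes n is a non-negative integer."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n <= 1:
        return 1
    return n * factorial_recursive(n - 1)


print(factorial_recursive(5))   # 120
```

The number of multiplications is the same as in the loop, so the time complexity stays ##O(n)##. The recursive version additionally uses ##O(n)## space for the call stack, and in CPython it fails with a `RecursionError` once n exceeds the interpreter's recursion limit (typically around 1000), so the iterative form is usually the safer choice for large n.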

Summary Table of Problems

| Problem | Algorithm | Time Complexity |
|---|---|---|
| Find maximum element in array | Linear scan | O(n) |
| Search in sorted array | Binary search | O(log n) |
| Sort array | Bubble sort | O(n²) |
| First repeated character | Hash set lookup | O(n) |
| Factorial of number | Iteration/recursion | O(n) |

These examples highlight how different algorithms vary in efficiency. Understanding Big O analysis allows developers to select the best-suited algorithm for performance-sensitive applications.

Key Takeaways: Mastering Big O Notation for Enhanced Efficiency

In conclusion, Big O notation is a fundamental concept in computer science, providing a powerful framework for analyzing and comparing algorithms. Understanding the principles of Big O notation empowers developers to make informed decisions about algorithm selection, optimize database queries, and develop efficient software. By mastering Big O notation, you gain the ability to assess the performance of algorithms and optimize software applications. It also enables better problem-solving and system design.

| Big O Notation | Description | Example |
|---|---|---|
| O(1) | Constant Time | Accessing an element in an array by index |
| O(log n) | Logarithmic Time | Binary search in a sorted array |
| O(n) | Linear Time | Iterating through an array once |
| O(n log n) | Linearithmic Time | Efficient sorting algorithms like Merge Sort |
| O(n²) | Quadratic Time | Bubble sort, Insertion Sort |
| O(2ⁿ) | Exponential Time | Brute-force enumeration of all subsets of a set |
